Part II - Determining Complexity of Algorithms

In Part I, we discussed the big-O notation and how to estimate the running time of a program. In this post, I’m going to apply time complexity analysis to various data structures. Understanding algorithmic complexity is crucial for software engineering because it helps identify areas in the program that can be optimized.

In Part I, we discussed the big-O notation and how to estimate the running time of a program. In this post, I’m going to apply time complexity analysis to various data structures. Understanding algorithmic complexity is crucial for software engineering because it helps identify areas in the program that can be optimized.

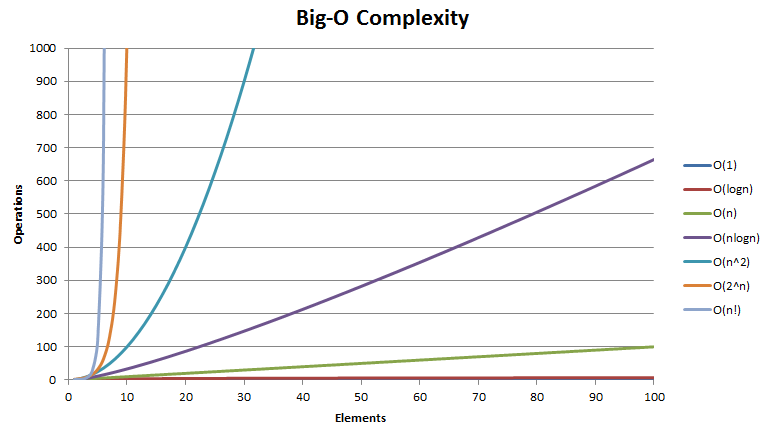

There are several types of complexities, which can be represented using the big-O notation, namely constant, linear, logarithmic, quadratic and exponential time.

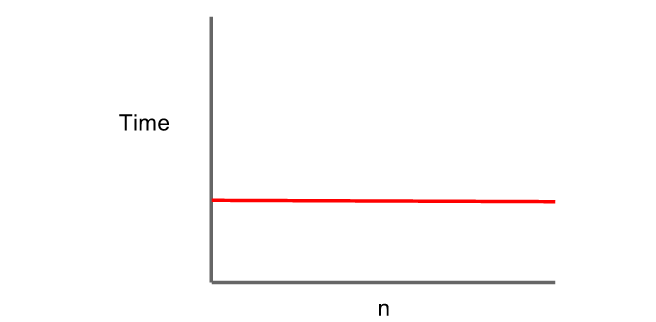

Constant Time

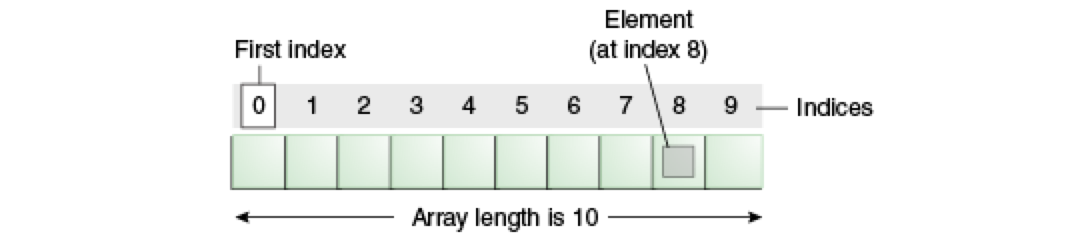

Constant time implies that the number of operations the program needs to perform to accomplish a task is independent of the input size. Constant time operations are ‘order of 1’ or O(1). Examples of constant time are looking up a value in an array, finding the size of an array, or appending values to an array. As the input size grows larger, the time it takes to perform these operations remains constant.

Constant time implies that the number of operations the program needs to perform to accomplish a task is independent of the input size. Constant time operations are ‘order of 1’ or O(1). Examples of constant time are looking up a value in an array, finding the size of an array, or appending values to an array. As the input size grows larger, the time it takes to perform these operations remains constant.

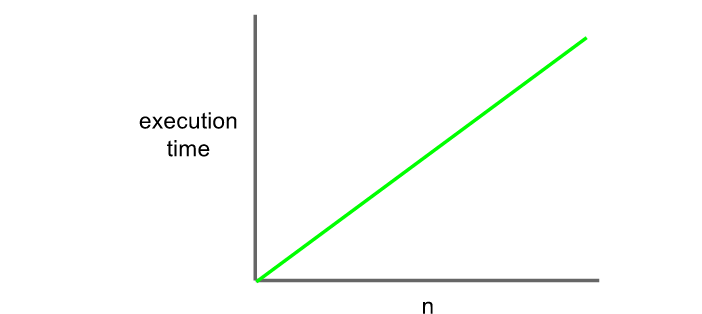

Linear Time

Linear time indicates that run time of the function is directly proportional to the input size. In our phone book example in Part 1, if we examine every single name in the phone book until we found the one we were looking for, it would be considered as linear time complexity or ‘order of n’ or O(n) because it would require to perform n look ups on an input size of n.

Linear time indicates that run time of the function is directly proportional to the input size. In our phone book example in Part 1, if we examine every single name in the phone book until we found the one we were looking for, it would be considered as linear time complexity or ‘order of n’ or O(n) because it would require to perform n look ups on an input size of n.

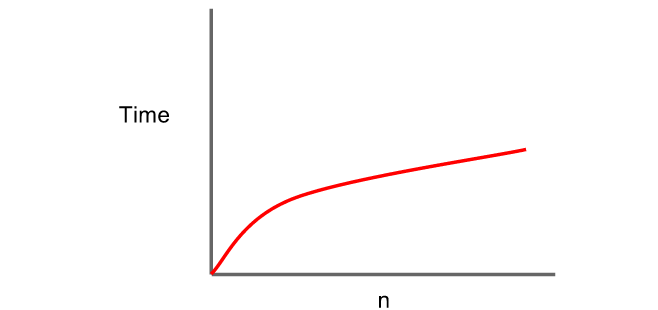

Logarithmic Time

Logarithmic time implies that the function’s run time is proportional to the logarithm of the input size. Its usually represented as O(log n) or ‘order of log n’. Binary search, in the phone book example in Part 1, runs in logarithmic time because with every operation, it discards half of the inputs.

Logarithmic time implies that the function’s run time is proportional to the logarithm of the input size. Its usually represented as O(log n) or ‘order of log n’. Binary search, in the phone book example in Part 1, runs in logarithmic time because with every operation, it discards half of the inputs.

Quadratic Time

Quadratic time suggests that the function’s run time is proportional to the square of the input size. Quadratic time is typically represented as ‘order N squared’ or O(n^2). An example of quadratic time would be comparing 2 integer lists against each other, nested “for” loops, bubble sort, selection sort and many others.

Quadratic time suggests that the function’s run time is proportional to the square of the input size. Quadratic time is typically represented as ‘order N squared’ or O(n^2). An example of quadratic time would be comparing 2 integer lists against each other, nested “for” loops, bubble sort, selection sort and many others.

Other Time Complexities

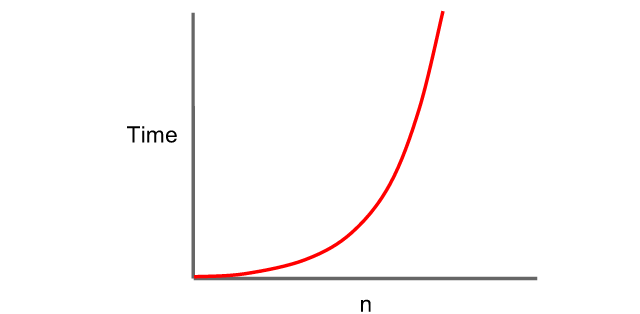

There are other types of complexities such as factorial and exponential time. These algorithms are usually used for very small input sizes because it takes a very long time to execute. An example of an exponential time algorithm would be guessing a password.

Complexity Analysis and Data Structures

We can demonstrate how time complexity analysis can be applied to data structures by analyzing the run time of various methods of the data structures below.

Arrays

Constant time or O(1) Array methods:

Constant time or O(1) Array methods:

- Ex. Updating, indexOf(), Append(), .length()

- The time to perform these functions is independent of the array (input) size

Linear time or O(n) methods:

- Ex. find(), insert(), remove()

- The time it takes to perform a function is proportional to the size of the array because each of the above functions requires iterating over the entire array. This may not be obvious at first, but in the case of insert or remove, we need to iterate over the remainder of the array in order to move the elements one index over to the right or to the left, respectively

Linked List

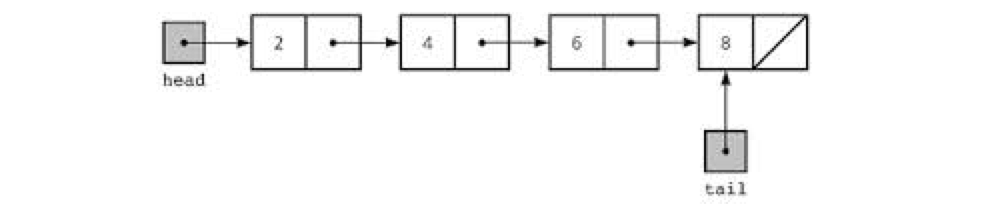

Constant time or O(1) methods:

Constant time or O(1) methods:

- ex. Appending to the Linked List, Inserting, Removing*

- Appending, Inserting and Removing* can be done in one step

*Removing a node can be done in constant time in a Linked List if a bidirectional pointer is added to link back to the previous node. This would improve time complexity, however have negative implication on space complexity since it would require to store a pointer back to the node that pointed to it in the first place

Linear time or O(n) methods:

- Ex. Find, Length

- Finding the node or getting the length of the Linked List requires to iterate over every node from the beginning of the list until the end

For those of you learning how to code, I put together a curated list of resources that I used to learn how to code which can be found at breakingintostartups.com

Sign up for the Breaking Into Startups newsletter here for further guidance.

If you enjoyed this post, please share it with your friends! ❤ Also, I would love to hear about your stories in the comments below.